Project Insight

An internet browsing data ingest tool that helps counteract OPSEC leaks by measuring how much data third-party companies collect.

May 13, 2023

For my senior capstone project, I worked with a team of four other cadets to build a tool that lets unit commanders see how their unit's internet browsing data could create an operational security (OPSEC) risk, based on how much of that data third-party companies collect.

Description

Open-source and commercial data increasingly reveal individual patterns of life that, when applied to military personnel, can pose operational security and readiness concerns.

One of the central mechanisms underlying this threat to the force is digital tracking.

The project will produce a solution that gives commanders of camps, posts, and garrisons an understanding of their organization's exposure to tracking and helps inform mitigation decisions.

This project will turn existing research into a turnkey solution that non-technical users can run against their browsing history.

The solution will output a well-formatted report that includes quantitative measures of exposure to trackers and of the efficacy of standard mitigation strategies.

Technical Details

Self-Hosted

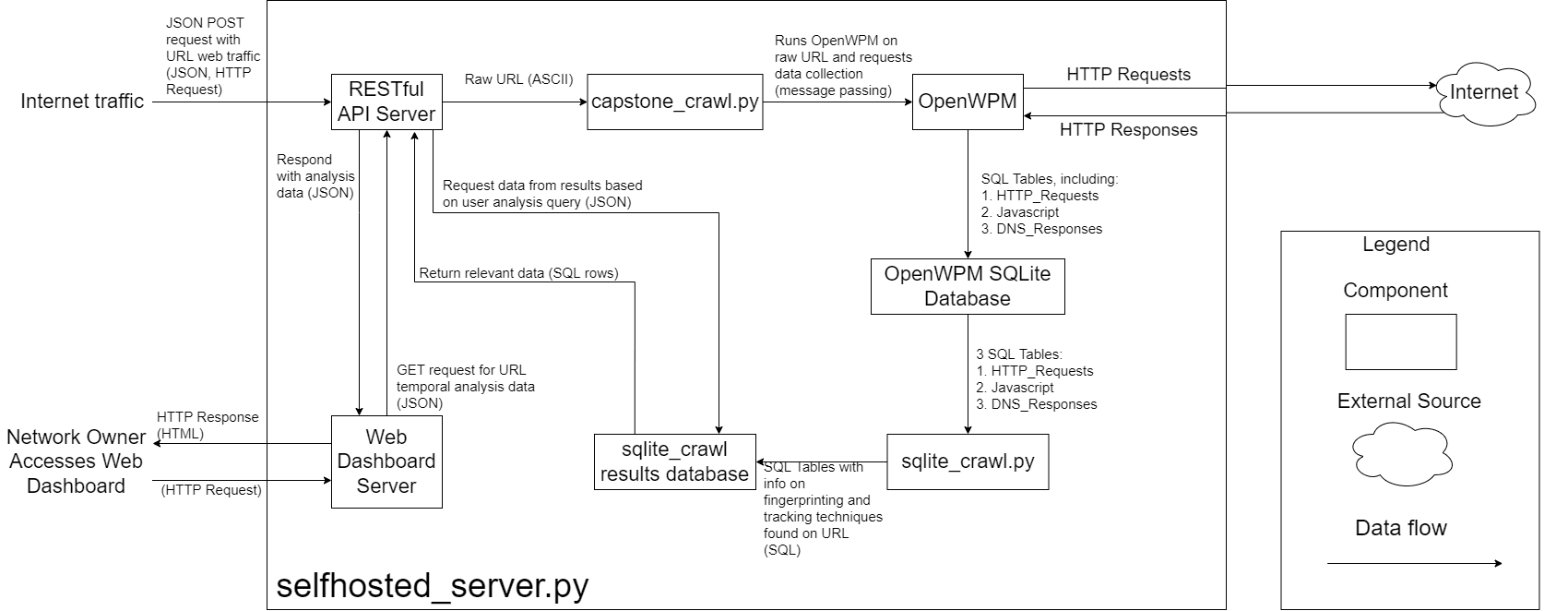

The self-hosted variant of Insight was designed to run on a network, with a router forwarding HTTP/HTTPS requests, in JSON format, to Insight's RESTful API endpoint.

Once a request is posted, the following occurs:

- The ingest engine takes the request and places it in a queue to crawl

- The asynchronous crawler uses OpenWPM to crawl the website

- The crawler writes the collected data to a SQLite database
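The ingest flow above can be sketched in Python as an `asyncio.Queue` feeding crawler workers that write to SQLite. This is a minimal sketch under stated assumptions, not Insight's actual code: the JSON field `url`, the `visits(url, tracker)` table, and the helpers `init_db`, `ingest`, `crawl`, `crawler_worker`, and `run_pipeline` are all hypothetical, and `crawl` is a stub standing in for an OpenWPM crawl rather than a call into OpenWPM's API.

```python
import asyncio
import json
import sqlite3

# Hypothetical stand-in for an OpenWPM crawl: maps a site to the
# third-party domains a real crawl might observe while loading it.
FAKE_OBSERVATIONS = {
    "https://news.example": ["ads.tracker.example", "cdn.analytics.example"],
    "https://shop.example": ["ads.tracker.example"],
}


def init_db(conn: sqlite3.Connection) -> None:
    # Hypothetical schema: one row per (visited site, third-party domain).
    conn.execute("CREATE TABLE IF NOT EXISTS visits (url TEXT, tracker TEXT)")


async def ingest(body: str, queue: asyncio.Queue) -> None:
    # Parse the JSON body posted to the API and queue the URL for crawling.
    # The "url" field name is an assumption.
    record = json.loads(body)
    await queue.put(record["url"])


async def crawl(url: str) -> list[str]:
    # Stub crawl: yields to the event loop, as a network-bound crawl would,
    # then returns the canned observations for this URL.
    await asyncio.sleep(0)
    return FAKE_OBSERVATIONS.get(url, [])


async def crawler_worker(queue: asyncio.Queue, conn: sqlite3.Connection) -> None:
    # Pull URLs off the queue forever; each crawl result is written to SQLite.
    while True:
        url = await queue.get()
        try:
            trackers = await crawl(url)
            conn.executemany(
                "INSERT INTO visits (url, tracker) VALUES (?, ?)",
                [(url, tracker) for tracker in trackers],
            )
            conn.commit()
        finally:
            queue.task_done()


async def run_pipeline(bodies: list[str], conn: sqlite3.Connection, workers: int = 2) -> None:
    queue: asyncio.Queue = asyncio.Queue()
    tasks = [asyncio.create_task(crawler_worker(queue, conn)) for _ in range(workers)]
    for body in bodies:
        await ingest(body, queue)
    await queue.join()  # wait until every queued URL has been crawled and stored
    for task in tasks:
        task.cancel()  # workers loop forever, so stop them explicitly
    await asyncio.gather(*tasks, return_exceptions=True)
```

Putting a queue between the API and the crawler means a burst of posted requests waits in line for a fixed pool of workers instead of launching an unbounded number of browser crawls at once.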

The network administrator can then view the data in the web dashboard (Grafana). After the administrator logs in, the following occurs:

- The web dashboard sends a request to the RESTful API endpoint

- The API queries an intermediate layer to get the data from the SQLite database

- The intermediate layer queries the SQLite database

- The SQLite database returns the data to the intermediate layer

- The intermediate layer parses the relevant data and returns it to the API

- The API returns the data to the web dashboard
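The dashboard request path can be illustrated with two small functions: an intermediate layer that queries SQLite and keeps only the fields the dashboard needs, and an API handler that serializes them to JSON. This is a hypothetical sketch; the `visits(url, tracker)` table, the "sites per tracker" aggregation, and the names `tracker_counts` and `handle_dashboard_request` are assumptions for illustration, not the queries Insight actually ran, and no specific Grafana data source is implied.

```python
import json
import sqlite3


def tracker_counts(conn: sqlite3.Connection) -> list[dict]:
    """Hypothetical intermediate layer: query SQLite and reduce the raw rows
    to what the dashboard needs (how many distinct sites each tracker saw)."""
    rows = conn.execute(
        "SELECT tracker, COUNT(DISTINCT url) AS sites "
        "FROM visits GROUP BY tracker ORDER BY sites DESC, tracker"
    ).fetchall()
    return [{"tracker": tracker, "sites": sites} for tracker, sites in rows]


def handle_dashboard_request(conn: sqlite3.Connection) -> str:
    """Hypothetical API handler: return the parsed data as a JSON body."""
    return json.dumps({"trackers": tracker_counts(conn)})
```

Keeping the SQL inside the intermediate layer means the API handler never touches the database schema directly, so the storage backend could change without changing what the dashboard receives.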

Cloud-Hosted

The cloud-hosted variant of Insight was designed to serve a simple frontend that lets individual users see how safe their browsing habits are.

It uses the same ingest engine as the self-hosted variant.

Technical Issues

Database speed

One issue we faced during this project was handling live data collection and database management. We initially used Pandas to collect and parse the data, but quickly realized that it was not a viable solution for live data collection.

We then switched to Polars, a faster, Rust-based DataFrame library, which allowed us to store the data in a database and query it only as needed.

Memory watchdog

We also implemented a memory watchdog that monitors the crawler's memory usage and restarts the crawler if usage exceeds a set threshold.

This feature was merged into the OpenWPM repository and is now available for use by other researchers!
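The watchdog idea reduces to a polling loop: read memory usage, compare it against a limit, restart on overrun, sleep, repeat. The sketch below is a generic illustration of that technique, not the code merged into OpenWPM; `memory_watchdog`, `get_memory_mb`, and `restart_crawler` are hypothetical names, and the memory reading and restart action are passed in as callables so the loop stays independent of how the crawler is launched.

```python
import threading
from typing import Callable


def memory_watchdog(
    get_memory_mb: Callable[[], float],
    restart_crawler: Callable[[], None],
    limit_mb: float,
    interval_s: float,
    stop: threading.Event,
) -> int:
    """Poll memory usage until `stop` is set, restarting the crawler each time
    usage exceeds `limit_mb`. Returns the number of restarts performed."""
    restarts = 0
    while not stop.is_set():
        if get_memory_mb() > limit_mb:
            restart_crawler()
            restarts += 1
        # Event.wait doubles as an interruptible sleep between checks.
        stop.wait(interval_s)
    return restarts
```

In practice this would run in a background thread (for example `threading.Thread(target=memory_watchdog, args=(...), daemon=True)`), and `get_memory_mb` could be built on psutil's `Process(pid).memory_info().rss`, which reports bytes.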

Future work

Future work is currently undefined: the capstone was taken over after the semester ended, and cadets are no longer developing it. However, the OPSEC concerns it addresses remain relevant and could be expanded upon in the future.